How AI ‘Eurovision Song Contest’ winners Uncanny Valley found the human-machine sweet spot

Producer and sonic technologist Justin Shave and Head of Music and Innovation Charlton Hill give us a look under the hood of the AI that co-created their winning track, Beautiful The World.

The Uncanny Valley team in the video for Beautiful The World

Hosted by Dutch broadcaster VPRO, this week’s Eurovision-inspired AI Song Contest brought AI-generated music into the limelight. Australian entrant Uncanny Valley won the public’s heart with Beautiful The World, a pop hit laced with whimsical lyrics, sporadic melodies and synthesised sounds of Australian native animals.

“The way we approached it specifically was to say: how do we mimic the creative process inside a brain?” explains Justin Shave, Uncanny Valley’s award-winning producer and sonic technologist. The music-tech collective used neural networks and an algorithmic process to achieve this ambitious goal. The first two neural networks were trained on melodic information sourced from 200 MIDI versions of Eurovision songs; another, trained on roughly 60,000 lines of English-language Eurovision lyrics, wrote new lyrics. An algorithmic process then matched melodies with lyrics, and a final machine-learning process turned the sounds of koalas and kookaburras into instruments.

Uncanny Valley is a well-rounded collective of musicians, sound engineers and sonic technologists, whom partnerships strategist Caroline Pegram connects with academics and data scientists. UNSW’s Dr Brendan Wright and Dr Oliver Bown brought their expertise to the development of Beautiful The World, with the help of RMIT’s Dr Alexandra Uitdenbogerd. Conversations with Shave and Charlton Hill, the company’s Head of Music and Innovation, suggest that collaboration, curiosity and playfulness are at the heart of the team’s ethos.

Inside the artificial mind

Uncanny Valley’s first neural net was trained on melodic material: melodies, chords, basslines and, in some cases, the “melodic hooks that they have in Eurovision songs,” says Shave. Before training, the team had to transpose the Eurovision MIDI tracks so they were all in the same key.
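The article doesn’t say which key the team standardised on or how each song’s key was identified. As a minimal sketch, assuming each song’s tonic is already known and the target key is C, transposing MIDI material comes down to adding the same number of semitones to every note number. The hypothetical helpers below pick the smallest such shift, so melodies stay close to their original register.

```python
def shift_to_c(tonic_pc: int) -> int:
    """Smallest shift in semitones (-5..+6) that moves a tonic pitch class to C.

    Pitch classes run 0 = C, 1 = C#, ..., 11 = B.
    """
    shift = (-tonic_pc) % 12
    return shift - 12 if shift > 6 else shift


def transpose(notes: list[int], tonic_pc: int) -> list[int]:
    """Transpose MIDI note numbers so the song's tonic becomes C."""
    shift = shift_to_c(tonic_pc)
    return [n + shift for n in notes]


# A D-major arpeggio (D4, F#4, A4) becomes a C-major one (C4, E4, G4).
print(transpose([62, 66, 69], tonic_pc=2))  # [60, 64, 67]
```

Choosing the smaller of the upward or downward shift is a design choice, not something the source specifies; it simply avoids pushing a melody an octave away from where it was written.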

The collective experimented with neural nets, landing on a modified SampleRNN model architecture. For context, SampleRNN is a popular neural audio generation model, used by Holly Herndon for her AI accomplice, Spawn.

SampleRNN can generate fragments of up to 30 seconds of melody. Shave says this particular network is well suited to producing music because the generative algorithm can learn from the musical ideas in those fragments. The model also allows for “continuation”, a process in which the AI extends a piece based on what it has learnt.
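SampleRNN itself is a hierarchical recurrent network, and the article doesn’t detail the team’s modifications. The idea behind continuation is simpler to show: predict the next step from what came before, append it, repeat. The sketch below illustrates that loop with a deliberately swapped-in and far simpler technique, a first-order Markov chain over MIDI pitches. The function names and toy melodies are hypothetical.

```python
import random
from collections import defaultdict


def train_transitions(melodies):
    """Record every pitch that follows each pitch in the training melodies.

    Repeats are kept, so common transitions are sampled more often.
    """
    table = defaultdict(list)
    for melody in melodies:
        for current, following in zip(melody, melody[1:]):
            table[current].append(following)
    return table


def continue_melody(primer, table, n_notes, seed=None):
    """Extend a primer one note at a time, conditioning only on the last note."""
    rng = random.Random(seed)
    melody = list(primer)
    for _ in range(n_notes):
        candidates = table.get(melody[-1])  # .get avoids creating empty entries
        if not candidates:  # no observed continuation: stop early
            break
        melody.append(rng.choice(candidates))
    return melody


# Toy training set of MIDI pitches (invented, not Eurovision data).
training = [[60, 62, 64, 65, 67], [60, 62, 64, 62, 60]]
table = train_transitions(training)
print(continue_melody([60], table, n_notes=8, seed=1))
```

A neural model replaces the lookup table with learned probabilities conditioned on a much longer context, which is what lets it carry musical ideas forward rather than just reacting to the previous note.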

A scanner darkly

Next, the team used another neural net: “a modified GPT-2, which is a different type of architecture trained on text data-set,” says Shave. This is the same model that its maker, OpenAI, once deemed “too dangerous” to release in full because of its ability to generate convincing fake news, though it seems much more at home in the creative arts.

The team trained GPT-2 on previous Eurovision lyrics to generate new lines, and were soon sorting through at least 500 AI-generated lyrics. Some of them weren’t exactly sunshine and light.
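The article doesn’t describe the team’s fine-tuning setup. A common convention when fine-tuning GPT-2, though, is to concatenate the training texts into one file separated by the model’s special <|endoftext|> token, so the model learns where one song ends and the next begins. A minimal sketch of that preparation step, with a hypothetical build_corpus helper and placeholder lyrics:

```python
# GPT-2's special end-of-text token, used here as a separator between songs.
EOT = "<|endoftext|>"


def build_corpus(songs):
    """Join songs (each a list of lyric lines) into one training text."""
    documents = []
    for lines in songs:
        # Collapse stray whitespace inside each line, then drop empty lines.
        cleaned = [" ".join(line.split()) for line in lines]
        cleaned = [line for line in cleaned if line]
        if cleaned:
            documents.append("\n".join(cleaned))
    return f"\n{EOT}\n".join(documents) + "\n"


songs = [
    ["Hello   world", ""],  # placeholder lyrics, not real Eurovision lines
    ["La la la", "Sing it again"],
]
print(build_corpus(songs))
```

The resulting text would then be handed to whichever GPT-2 fine-tuning script is in use.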

“There was some pretty dark stuff that we obviously didn’t want to let past the keeper,” says Hill. “It wasn’t quite ‘Kill All Humans’, but it was verging on some pretty negative connotations.” Shave laughs, adding, “But it was trained on Eurovision lyrics, so that wasn’t our fault!”

Putting words to music

RMIT’s Dr Alexandra Uitdenbogerd, a practising musician and computer scientist, developed Uncanny Valley’s third process, which matched the AI-generated lyrics to melodies.

It’s a step the team had specifically wanted to automate after painstakingly doing it by hand for an AI-generated Christmas carol they produced, Santa Claus is Coming to Little Lord Moses. Listen to the festive track alongside Beautiful The World and it’s clear the algorithm had the upper hand.

Uitdenbogerd’s process compared the syllables in each lyric line with note numbers and lengths, and found solutions using a best-fit algorithm. It wasn’t without its bugs, though, because the code didn’t implement the human skill of recognising a good ‘scan’, where a line’s natural stresses land comfortably on the music. Shave is confident this will be achievable next time, arguing that neural nets could be trained to do it.
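Uitdenbogerd’s actual algorithm isn’t described in detail. As a rough illustration only, assuming “best fit” means pairing each lyric line with the melodic phrase whose note count is closest to its syllable count, a sketch might look like this. The vowel-group syllable counter is a crude heuristic, and the code ignores note lengths and stress entirely, which is exactly the ‘scan’ problem the team ran into. All names are hypothetical.

```python
import re


def count_syllables(word: str) -> int:
    """Crude estimate: count runs of vowels, discounting a silent final 'e'."""
    w = word.lower()
    count = len(re.findall(r"[aeiouy]+", w))
    if w.endswith("e") and not w.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def line_syllables(line: str) -> int:
    """Sum the estimated syllables of every word in a lyric line."""
    return sum(count_syllables(w) for w in re.findall(r"[A-Za-z']+", line))


def best_fit(lines, phrases):
    """Pair each lyric line with the phrase whose note count is closest to
    its syllable count. Returns (line, phrase_index, mismatch) tuples."""
    matches = []
    for line in lines:
        n = line_syllables(line)
        idx = min(range(len(phrases)), key=lambda i: abs(len(phrases[i]) - n))
        matches.append((line, idx, abs(len(phrases[idx]) - n)))
    return matches


# Phrases as lists of (MIDI pitch, length in beats); the contents are invented.
phrases = [[(60, 1)] * 6, [(62, 0.5)] * 8, [(64, 0.5)] * 10]
print(best_fit(["The world is beautiful, the world"], phrases))
# [('The world is beautiful, the world', 1, 0)]
```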

Regardless, the team stuck firmly to the melodic and lyrical combinations that the algorithm spat out, changing neither the notes nor the note lengths within a selected phrase.

These lyric and melody pairings were then sung by Sinsy, a Japanese open-source singing voice synthesis system, allowing the collective to cherry-pick their favourite passages.
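Sinsy takes its input as a MusicXML score with lyrics attached to individual notes. The sketch below, using only Python’s standard library, writes a bare-bones score of that kind from (pitch, duration, syllable) tuples. It’s an assumption that Sinsy accepts this minimal file unchanged: real scores usually carry extra elements such as note types, clefs and an XML declaration. The to_musicxml helper is hypothetical.

```python
import xml.etree.ElementTree as ET

# Spelling every black key as a sharp keeps the sketch short.
STEPS = ["C", "C", "D", "D", "E", "F", "F", "G", "G", "A", "A", "B"]
SHARP = [0, 1, 0, 1, 0, 0, 1, 0, 1, 0, 1, 0]
DIVISIONS = 4     # duration units per quarter note
MEASURE_LEN = 16  # one 4/4 bar = 4 quarters x 4 divisions


def to_musicxml(notes):
    """notes: (midi_pitch, duration_in_divisions, syllable) tuples.

    Assumes no note crosses a barline.
    """
    score = ET.Element("score-partwise", version="3.1")
    score_part = ET.SubElement(ET.SubElement(score, "part-list"), "score-part", id="P1")
    ET.SubElement(score_part, "part-name").text = "Voice"
    part = ET.SubElement(score, "part", id="P1")

    measure, filled = None, MEASURE_LEN
    for pitch, duration, syllable in notes:
        if filled >= MEASURE_LEN:  # current bar is full: start a new one
            number = len(part) + 1
            measure = ET.SubElement(part, "measure", number=str(number))
            if number == 1:  # time signature and divisions go in the first bar
                attrs = ET.SubElement(measure, "attributes")
                ET.SubElement(attrs, "divisions").text = str(DIVISIONS)
                time = ET.SubElement(attrs, "time")
                ET.SubElement(time, "beats").text = "4"
                ET.SubElement(time, "beat-type").text = "4"
            filled = 0
        note = ET.SubElement(measure, "note")
        pitch_el = ET.SubElement(note, "pitch")
        ET.SubElement(pitch_el, "step").text = STEPS[pitch % 12]
        if SHARP[pitch % 12]:
            ET.SubElement(pitch_el, "alter").text = "1"
        ET.SubElement(pitch_el, "octave").text = str(pitch // 12 - 1)  # MIDI 60 = C4
        ET.SubElement(note, "duration").text = str(duration)
        lyric = ET.SubElement(note, "lyric")
        ET.SubElement(lyric, "text").text = syllable
        filled += duration
    return ET.tostring(score, encoding="unicode")


score_xml = to_musicxml([(60, 4, "beau"), (62, 4, "ti"), (64, 8, "ful"), (65, 16, "world")])
print(score_xml[:80])
```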

The team was looking for “hooky, catchy Eurovision earworms,” says Shave. Perhaps counterintuitively, this approach to producing Beautiful The World was quite similar to how he’d produce a human-made song: just as a producer can tease ideas out of a human artist, Shave explains, he was able to do the same with AI-generated music.

Smarter synths

The competition was technically a measure of songwriting prowess, and the Uncanny Valley team has been very open about the fact that the track was produced by award-winning (human) producer Justin Shave.

However, the team wanted another challenge. “I wanted to get a component of audio production in the AI, because I didn’t just want to produce a song that the AI had written,” Shave explains.

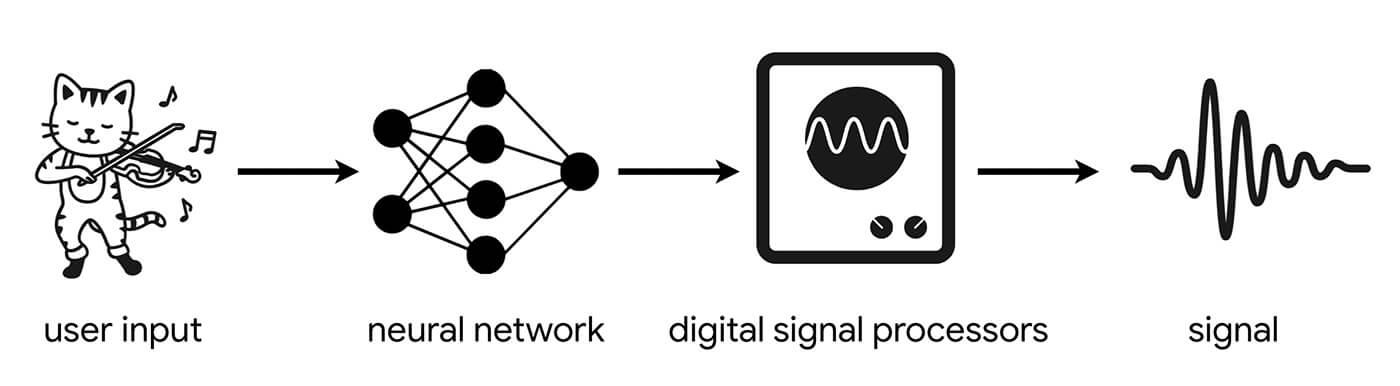

Before the contest, the collective had been working with Google’s Creative Lab in Sydney. There, they experimented with a new system called DDSP – differentiable digital signal processing – created by Magenta, an open-source research project from Google. “[DDSP is] a machine-learning technique that you actually feed audio into. It’s able to do a style transfer of one sound into another. So, for instance, you could train it on a virtuosic violin player and then sing a melody line. It would straight away, without being translated into MIDI, transfer the style of your singing into a violin,” explains Shave.
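DDSP’s key idea is to have a neural network drive classic synthesis components, such as a bank of harmonic oscillators plus filtered noise, rather than generating raw audio sample by sample. The sketch below shows only the oscillator half in plain Python: given a fundamental frequency and a set of harmonic amplitudes for each sample, it sums sine waves at whole-number multiples of the fundamental. In DDSP those amplitudes are predicted by a trained network and every step is differentiable; here they are written by hand, and this is not Magenta’s API.

```python
import math


def harmonic_synth(f0_hz, harmonic_amps, sample_rate=16000):
    """Additive synthesis in the spirit of DDSP's harmonic oscillator.

    f0_hz: fundamental frequency for each output sample.
    harmonic_amps: for each sample, a list of amplitudes for harmonics 1..K.
    """
    nyquist = sample_rate / 2
    two_pi = 2 * math.pi
    phase = 0.0
    out = []
    for f0, amps in zip(f0_hz, harmonic_amps):
        # Accumulate the fundamental's phase so pitch can change smoothly;
        # wrapping at 2*pi is safe because every harmonic is a whole multiple.
        phase = (phase + two_pi * f0 / sample_rate) % two_pi
        sample = 0.0
        for k, amp in enumerate(amps, start=1):
            if k * f0 < nyquist:  # skip harmonics above Nyquist to avoid aliasing
                sample += amp * math.sin(k * phase)
        out.append(sample)
    return out


sr = 16000
n = sr  # one second of audio
amps = [1 / k for k in range(1, 9)]  # invented, gently decaying harmonic series
tone = harmonic_synth([220.0] * n, [amps] * n, sr)
```

In a trained DDSP model, timbre comes from the amplitudes the network learns to predict for a given pitch and loudness, which is how one sound source can be made to “play” another’s melody.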

Taking a side step from virtuoso violinists, Uncanny Valley used DDSP to manipulate recordings of koalas, Tasmanian devils and kookaburras. “We trained the neural net to listen to those and to use them as an instrument,” explains Shave. The collective chose these animals as a message of hope after Australia’s recent mega-fires devastated native wildlife populations.
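For timbre transfer, Magenta’s DDSP models are conditioned on two features extracted from the input audio: fundamental frequency (estimated with the CREPE pitch tracker) and loudness. As a simplified, hypothetical stand-in for the loudness feature, the function below computes a frame-by-frame RMS level in decibels. Magenta’s version applies A-weighting, which this sketch skips.

```python
import math


def frame_loudness_db(samples, frame_size=1024, hop=512, floor_db=-80.0):
    """Frame-by-frame RMS level in dB relative to full scale (unweighted)."""
    if not samples:
        return []
    levels = []
    last_start = max(len(samples) - frame_size, 0)
    for start in range(0, last_start + 1, hop):
        frame = samples[start:start + frame_size]
        rms = math.sqrt(sum(s * s for s in frame) / len(frame))
        # Silence has no finite dB value, so clamp it to a floor.
        level = 20 * math.log10(rms) if rms > 0 else floor_db
        levels.append(max(level, floor_db))
    return levels


# A silent-then-loud synthetic signal standing in for an animal recording.
signal = [0.0] * 2048 + [0.5] * 2048
print([round(x, 1) for x in frame_loudness_db(signal)])
# [-80.0, -80.0, -80.0, -9.0, -6.0, -6.0, -6.0]
```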

You can hear the results in the lead synth line at 1:15 in the Beautiful The World video, at the end of this article.

Happy accidents

After deciphering the melodies and arrangement for Beautiful The World, Uncanny Valley brought in vocalist Antigone. “[They] sing what the AI sang, which wasn’t easy,” says Shave. If you listen to the track, you’ll notice there’s a wide tonal range and the melody’s direction is almost impossible to predict. The team wanted to leave it that way, though, as Hill explains, “It’s one of the hallmarks of AI: its music is made from melodies and lyrics that you probably wouldn’t sing as a human. That’s what gives it its jerky, jumpy around feeling.”

“I actually like that – that the melody’s surprising. I love happy accidents. I’m a firm believer that every good pop music production has something in it that’s surprising, which piques your brain to go: that’s new, but it’s kind of the same,” continues Shave.

Hill says, “My role came into force around the construction of the lyrical content given my history as a songwriter.” He points to how quickly AI can spark ideas for lyrics. “[The AI] was incredibly informative in terms of giving you a storyline.” Surprisingly, the neural net produced lyrics that suggested a structure. “It wasn’t nonsensical,” he adds.

Beyond the slightly erratic AI melody, it turned out that a bug in RMIT’s code was what made the lyrics feel familiar.

“It was kind of like its own little idea loop,” says Hill. The lyrics would cycle, tacking part of a sentence back onto the end of a phrase, as in the line ‘The world is beautiful, the world.’ This bug mimics a writing technique often used by human songwriters. “It has its own linguistic style,” Hill says. “You could argue that that was a nuance that was artistic in itself.”
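The article doesn’t explain what caused the bug in RMIT’s code. Purely to illustrate the effect Hill describes, a hypothetical echo_tail function that tacks the opening words of a line back onto its end reproduces the example lyric:

```python
def echo_tail(line: str, n_words: int) -> str:
    """Repeat a line's first n_words at its end, e.g. '..., the world'."""
    words = line.rstrip(" ,.").split()
    if n_words <= 0 or n_words >= len(words):
        return line  # nothing sensible to loop back
    head = words[:n_words]  # a copy, so editing it leaves `words` intact
    if head[0] != "I":  # keep the pronoun capitalised
        head[0] = head[0].lower()
    return f"{' '.join(words)}, {' '.join(head)}"


print(echo_tail("The world is beautiful", 2))  # The world is beautiful, the world
```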

“It doesn’t necessarily understand itself in its sentiments yet,” he adds, explaining that the neural network is using a map of Eurovision linguistics and is trying to put it back together again. “I think we found it quite charming, its turn of phrase was quite likeable.”

Creativity and AI

“Songwriting is about capturing spontaneity,” says Hill, explaining that AI can assist an artist by pushing them into that ‘a-ha’ moment where creative ideas start to spark. “[It can assist by] putting people into that special place that generates fantastic human spontaneity – I think that that’s the best thing that AI is going to bring to the songwriting process.”

After pointing out that Beautiful The World’s lyrics sound almost child-like, Hill says that a youthful, curious approach to creativity is the essence of songwriting. “How can you write a song if you’re editing or criticising yourself at every turn? You have to flow and then come back to it, like how an editor would for a novel, and ask what happened. Because I couldn’t tell when I was in the moment.” He believes AI is an excellent tool for artists to overcome rumination, overthinking and decision paralysis. “It can be bonkers,” Hill says.

Does not compute

That said, not all the accidents were necessarily happy ones. Uncanny Valley experimented with Spleeter, a source separation tool created by Deezer. “It uses machine-learning to extract vocals and stems from finished tracks. It’s a karaoke dream,” says Shave. “We thought, ‘Could we extract all these lyrics from all these audio versions of the Eurovision songs and put them through a SampleRNN neural net like how Holly Herndon does?’” In the name of experimentation, they gave it their best shot.

“It ended up sounding like a rabble of 500 millennials arguing in a dancehall,” says Shave, breaking into laughter.

“That’s half the creative process as well: figuring out what AI methods you’re going to use to actually make the creation,” he says.

“We feel that the discussion right now has gone way beyond just outputting raw music data outputs,” Hill says. “It’s saying: ‘Thanks for the idea, AI. Now let’s turn it into a hit.’ And that’s the sweet spot in terms of the collaboration with AI and the co-creation between human and machine.”

An uncanny future

Beautiful The World lives on in new forms through the company’s memu.live generative music streaming project, which endlessly remixes the AI Song Contest’s winning track.

Next, Uncanny Valley plans to focus attention on emotional data in audio, an area that’s become a key interest for the collective.

Shave outlines an interest in sonic memes, or “echemes” as he calls them. “They’re intelligent bytes of audio that understand everything about themselves using some feature extraction techniques,” explains Shave. “They’re figuring out what key they’re in, what rhythm they might be using, their own tempo.” The team is also working on systems that can predict the emotions music elicits in listeners, pointing to Musiio, a Singapore-based AI company that uses feature-extraction machine-learning techniques to automatically tag music library tracks with genre, tempo and emotion.
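Shave doesn’t say how echemes will work out their key. One classic approach for symbolic (MIDI) material is Krumhansl-Schmuckler key finding: build a histogram of the 12 pitch classes, correlate it against Krumhansl and Kessler’s major and minor key profiles rotated to each possible tonic, and take the best match. The sketch assumes MIDI note numbers as input; audio would first need a chroma (pitch-class) front end. Function names are hypothetical.

```python
import math

# Krumhansl-Kessler key profiles, indexed from the tonic (index 0 = tonic).
MAJOR = [6.35, 2.23, 3.48, 2.33, 4.38, 4.09, 2.52, 5.19, 2.39, 3.66, 2.29, 2.88]
MINOR = [6.33, 2.68, 3.52, 5.38, 2.60, 3.53, 2.54, 4.75, 3.98, 2.69, 3.34, 3.17]
NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length lists."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    spread = math.sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))
    return cov / spread if spread else 0.0


def estimate_key(midi_notes):
    """Krumhansl-Schmuckler key finding on a list of MIDI note numbers."""
    if not midi_notes:
        raise ValueError("need at least one note")
    histogram = [0] * 12
    for note in midi_notes:
        histogram[note % 12] += 1
    best = None
    for tonic in range(12):
        for mode, profile in (("major", MAJOR), ("minor", MINOR)):
            # Rotate the profile so that pitch class `tonic` gets the tonic weight.
            rotated = [profile[(pc - tonic) % 12] for pc in range(12)]
            r = pearson(histogram, rotated)
            if best is None or r > best[0]:
                best = (r, f"{NAMES[tonic]} {mode}")
    return best[1]


melody = [60, 62, 64, 65, 67, 69, 71, 72, 64, 67, 60]
print(estimate_key(melody))  # C major
```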

This might all sound advanced, but when it comes to fears about whether AI will replace human songwriters, Shave is reassuring. “We always say AI is about collaboration, it’s never about replacement.”

Find out more about Uncanny Valley at uncannyvalley.com.au.

Read more about music tech developments in artificial intelligence on MusicTech.

Additional reporting by Will Betts.